Introduction

Some bots, such as search engine crawlers, help index your website, but malicious or spam bots can slow down your server and probe it for vulnerabilities. In this guide, we'll show you how to block unwanted bots on your web server using .htaccess, server settings, and firewalls.

1. Why Block Bots?

- Protect server resources

- Reduce spam and fake traffic

- Improve website performance and speed

- Enhance web server security and integrity

2. Identify Bad Bots

Check your server logs, or use tools such as Google Analytics, Cloudflare, or AWStats, to identify suspicious user agents or IP addresses that send an unusually high number of requests. Common bad bots include scrapers, brute-force attackers, and spam crawlers.
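As a starting point for reviewing raw server logs, the sketch below counts requests per IP address and flags user agents that look like bots. It assumes the Apache/Nginx "combined" log format; the keyword list, the request threshold, and the sample log lines (which use documentation-only IP addresses) are hypothetical and should be tuned to your own traffic.

```python
import re
from collections import Counter

# Assumes the Apache/Nginx "combined" log format; adjust the regex if your
# server writes a different format.
COMBINED_LOG = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "[^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# Hypothetical keywords; build your own list from what appears in your logs.
SUSPICIOUS_KEYWORDS = ("scraper", "badbot")

def summarize_log(lines, max_requests=100):
    """Return (IPs at or above max_requests, Counter of suspicious user agents)."""
    requests_per_ip = Counter()
    flagged_agents = Counter()
    for line in lines:
        match = COMBINED_LOG.match(line)
        if match is None:
            continue  # skip lines that are not in the expected format
        ip, agent = match.group("ip"), match.group("agent")
        requests_per_ip[ip] += 1
        # An empty or "-" user agent is also a common sign of a crude bot
        if agent in ("", "-") or any(k in agent.lower() for k in SUSPICIOUS_KEYWORDS):
            flagged_agents[agent] += 1
    heavy_ips = {ip: n for ip, n in requests_per_ip.items() if n >= max_requests}
    return heavy_ips, flagged_agents

sample = [
    '203.0.113.5 - - [10/Oct/2023:13:55:36 +0000] "GET /wp-login.php HTTP/1.1" 404 512 "-" "EvilScraper/1.0"',
    '203.0.113.5 - - [10/Oct/2023:13:55:37 +0000] "GET /xmlrpc.php HTTP/1.1" 404 512 "-" "EvilScraper/1.0"',
    '198.51.100.7 - - [10/Oct/2023:13:56:01 +0000] "GET / HTTP/1.1" 200 2048 "-" "Mozilla/5.0"',
]
heavy, agents = summarize_log(sample, max_requests=2)
print(heavy)   # {'203.0.113.5': 2}
print(agents)  # Counter({'EvilScraper/1.0': 2})
```

On a real log you would read lines from the file and use a much higher threshold; the output is a shortlist to review by hand, not proof that a client is malicious.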

3. Methods to Block Bots

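- .htaccess Rules: Use RewriteCond and RewriteRule (from Apache's mod_rewrite module) to deny access based on user-agent strings.

- robots.txt: Specify rules to allow or disallow bots. Only well-behaved crawlers follow these rules; malicious bots can simply ignore them.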

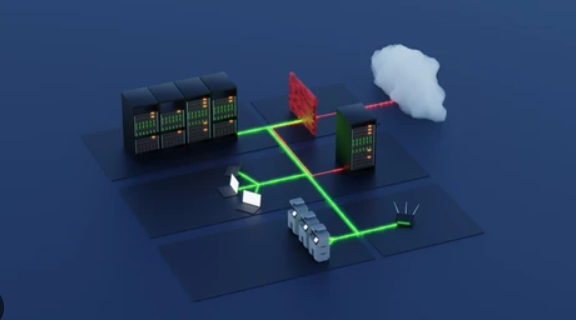

- Firewall/IP Filtering: Block IP ranges or geographic locations.

- Web Application Firewalls (WAF): Use tools like ModSecurity.
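For the .htaccess method, the sketch below generates mod_rewrite directives that answer 403 Forbidden to requests whose User-Agent contains any listed token, plus a Python helper that previews which user agents the same pattern would match. The bot names "BadBot" and "EvilScraper" are hypothetical, and the server must have mod_rewrite enabled and allow overrides in .htaccess for the generated rules to take effect.

```python
import re

def build_htaccess_rules(bad_agents):
    """Return mod_rewrite lines that answer 403 Forbidden to matching user agents.

    bad_agents: user-agent substrings, matched case-insensitively ([NC]).
    Tokens must not contain whitespace, which would split the directive.
    """
    if not bad_agents:
        raise ValueError("no user agents given")  # "()" would match every request
    for agent in bad_agents:
        if not agent or any(ch.isspace() for ch in agent):
            raise ValueError(f"unsupported user-agent token: {agent!r}")
    # re.escape keeps characters such as "." literal inside the pattern
    alternation = "|".join(re.escape(agent) for agent in bad_agents)
    return "\n".join([
        "RewriteEngine On",
        f"RewriteCond %{{HTTP_USER_AGENT}} ({alternation}) [NC]",
        # "-" leaves the URL unchanged; [F] sends 403 Forbidden, [L] stops processing
        "RewriteRule .* - [F,L]",
    ])

def is_blocked(user_agent, bad_agents):
    """Mirror the RewriteCond test in Python to preview which agents match."""
    pattern = "|".join(re.escape(agent) for agent in bad_agents)
    return re.search(pattern, user_agent, re.IGNORECASE) is not None

bots = ["BadBot", "EvilScraper"]  # hypothetical user-agent tokens
print(build_htaccess_rules(bots))
print(is_blocked("Mozilla/5.0 (compatible; evilscraper/2.1)", bots))  # True
print(is_blocked("Mozilla/5.0 (Windows NT 10.0)", bots))              # False
```

Because the User-Agent header is set by the client, this only stops bots that identify themselves honestly; combine it with IP filtering for bots that disguise themselves.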
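To check how a compliant crawler would interpret your robots.txt rules, Python's standard urllib.robotparser module can evaluate them locally. The robots.txt content, the "BadBot" name, and the example.com URLs below are hypothetical, and the result only describes bots that choose to honor robots.txt.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: shut out one named crawler, keep /admin/ private for all
ROBOTS_TXT = """\
User-agent: BadBot
Disallow: /

User-agent: *
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent, url in [
    ("BadBot", "https://example.com/blog/"),
    ("Googlebot", "https://example.com/blog/"),
    ("Googlebot", "https://example.com/admin/settings"),
]:
    print(agent, url, parser.can_fetch(agent, url))
# BadBot https://example.com/blog/ False
# Googlebot https://example.com/blog/ True
# Googlebot https://example.com/admin/settings False
```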
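IP filtering is usually done in a firewall, but the same range check is easy to express in application code, for example in a small middleware. This sketch uses Python's standard ipaddress module; the block list is hypothetical and uses documentation-only ranges. Blocking by geographic location would additionally need a GeoIP database, which is not shown here.

```python
import ipaddress

# Hypothetical block list built from documentation-only address ranges.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("2001:db8:bad::/48"),
]

def is_ip_blocked(client_ip, networks=BLOCKED_NETWORKS):
    """Return True if client_ip falls inside any of the blocked networks."""
    address = ipaddress.ip_address(client_ip)
    # Membership is simply False when the IP versions differ (v4 vs v6)
    return any(address in network for network in networks)

print(is_ip_blocked("203.0.113.42"))     # True
print(is_ip_blocked("198.51.100.7"))     # False
print(is_ip_blocked("2001:db8:bad::1"))  # True
```

Checking CIDR ranges rather than single addresses matters because bots often rotate through many addresses in the same network.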

4. Monitor & Update Regularly

Blocking bots isn’t a one-time task. Keep your block lists and filters updated. Consider using automated bot protection solutions or security plugins.
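Keeping an IP block list updated tends to produce duplicates and overlapping ranges over time. The sketch below merges newly observed offenders into an existing list and collapses the result with ipaddress.collapse_addresses; the function name merge_blocklists and the example entries are hypothetical.

```python
import ipaddress

def merge_blocklists(current, new_entries):
    """Merge two lists of IP/CIDR strings, dropping duplicates and overlaps."""
    networks = [ipaddress.ip_network(e, strict=False) for e in [*current, *new_entries]]
    merged = []
    for version in (4, 6):
        same_version = [n for n in networks if n.version == version]
        # collapse_addresses only accepts one IP version at a time; it merges
        # adjacent and overlapping networks into the smallest equivalent list
        merged.extend(ipaddress.collapse_addresses(same_version))
    return [str(n) for n in merged]

current = ["203.0.113.0/25", "192.0.2.10"]
new = ["203.0.113.128/25", "192.0.2.10", "2001:db8::/32"]
print(merge_blocklists(current, new))
# ['192.0.2.10/32', '203.0.113.0/24', '2001:db8::/32']
```

Here the two adjacent /25 ranges become one /24 and the repeated address is listed once, which keeps firewall rule sets short.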

Conclusion

By taking proactive steps to detect and block bad bots, you can secure your website, improve performance, and protect your content from scraping or abuse. Always strike a balance between allowing helpful bots (like Googlebot) and denying harmful ones.
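Since bad bots often copy Googlebot's user-agent string, allowing "Googlebot" by name is not enough on its own. Google documents a DNS-based check: reverse-resolve the client IP, confirm the hostname belongs to a Google crawler domain, then forward-resolve that hostname and confirm it maps back to the same IP. The sketch below assumes the domain list shown (verify it against Google's current crawler documentation) and uses socket.gethostbyname_ex, which resolves IPv4 only. The resolvers are injectable so the logic can be tried offline with the hypothetical stubs shown.

```python
import socket

# Assumption: check Google's crawler documentation for the current domain list
GOOGLE_CRAWLER_DOMAINS = (".googlebot.com", ".google.com")

def is_verified_googlebot(ip, reverse_lookup=socket.gethostbyaddr,
                          forward_lookup=socket.gethostbyname_ex):
    """Reverse-then-forward DNS check for a client claiming to be Googlebot."""
    try:
        hostname = reverse_lookup(ip)[0]
    except OSError:
        return False  # no reverse (PTR) record for this IP
    if not hostname.endswith(GOOGLE_CRAWLER_DOMAINS):
        return False
    try:
        _, _, addresses = forward_lookup(hostname)
    except OSError:
        return False
    # The hostname must resolve back to the original IP
    return ip in addresses

# Offline demo with stub resolvers (hypothetical hostname, documentation IPs)
def stub_reverse(ip):
    return ("crawl-192-0-2-1.googlebot.com", [], [ip])

def stub_forward(hostname):
    return (hostname, [], ["192.0.2.1"])

print(is_verified_googlebot("192.0.2.1", stub_reverse, stub_forward))     # True
print(is_verified_googlebot("198.51.100.9", stub_reverse, stub_forward))  # False
```

In production you would call it with the default resolvers and cache the result per IP, since DNS lookups on every request add latency.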